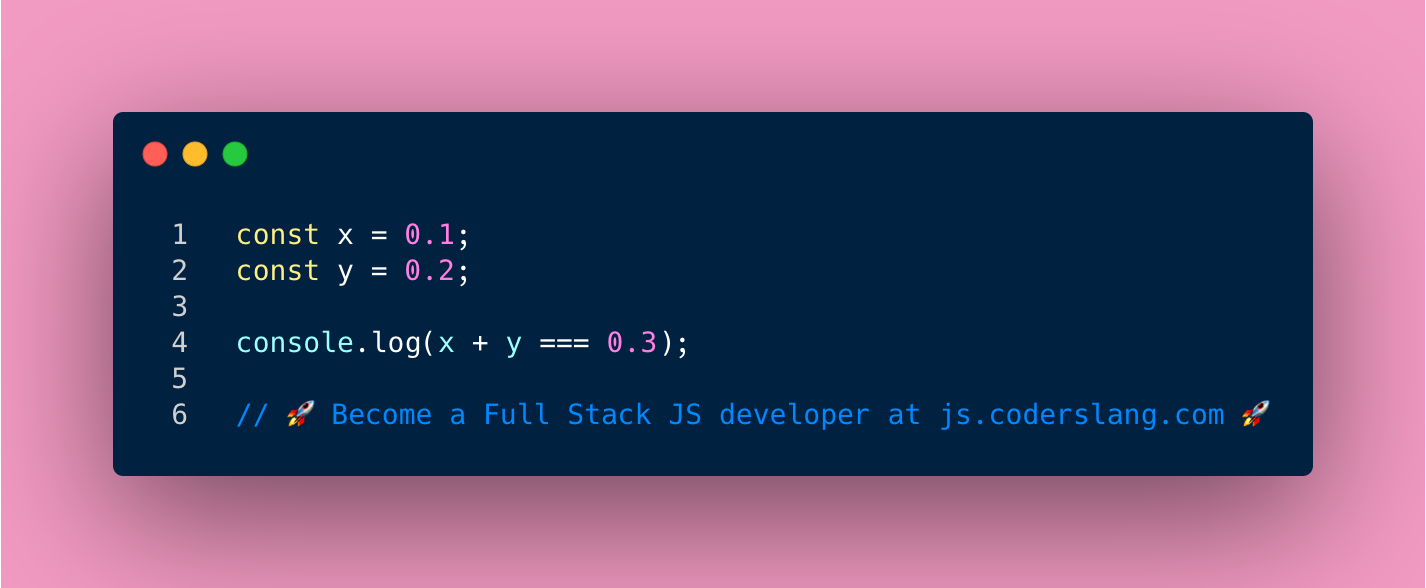

JavaScript math is weird. What’s the output? True or false?

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Inside the computer, all numbers are stored in the Binary Number System.

To keep it simple, a binary number is a sequence of bits, which are “digits” that can be either 0 or 1.

The number 0.1 is the same as 1/10, which is easy to write as a decimal number. In binary, however, it turns into an endless repeating fraction, similar to what 1/3 is in decimal (0.333...).
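To see the repeating pattern for yourself, here is a minimal sketch that does the long division in base 2. The helper name `binaryFraction` is made up for this illustration and is not part of JavaScript.

```javascript
// Hypothetical helper: returns the first `digits` binary digits
// after the point for the fraction numerator/denominator (0 <= value < 1).
function binaryFraction(numerator, denominator, digits) {
  let remainder = numerator;
  let result = "";
  for (let i = 0; i < digits; i++) {
    remainder *= 2; // shift one binary place to the left
    if (remainder >= denominator) {
      result += "1";
      remainder -= denominator;
    } else {
      result += "0";
    }
  }
  return result;
}

console.log(binaryFraction(1, 2, 4));   // 1000 (1/2 is exactly 0.1 in binary)
console.log(binaryFraction(1, 10, 16)); // 0001100110011001 (the 0011 group never ends)
```

The remainder for 1/10 never reaches zero, so the digits keep cycling no matter how many of them you ask for.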

Every value of the JavaScript Number type is stored as a 64-bit double-precision floating-point value (the IEEE 754 format). When there’s not enough space to hold the value exactly, the least significant digits are rounded.
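If you're curious what those 64 bits look like, the sketch below reads them with the built-in `DataView`. The function name `doubleToBits` is an invented helper for this example, and it assumes an environment with BigInt support (any modern browser or Node.js).

```javascript
// Hypothetical helper: splits a Number into the three fields
// of its 64-bit floating-point representation.
function doubleToBits(x) {
  const view = new DataView(new ArrayBuffer(8));
  view.setFloat64(0, x); // big-endian by default
  const bits = view.getBigUint64(0).toString(2).padStart(64, "0");
  return {
    sign: bits.slice(0, 1),      // 1 bit
    exponent: bits.slice(1, 12), // 11 bits
    mantissa: bits.slice(12),    // 52 bits
  };
}

const parts = doubleToBits(0.1);
console.log(parts.sign);     // 0
console.log(parts.exponent); // 01111111011
console.log(parts.mantissa); // repeating 1001 groups, with the last group rounded up to 1010
```

Only 52 bits are available for the fraction, so the endless pattern has to be cut off and rounded at the end.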

This is why, in JavaScript, 0.1 + 0.2 evaluates to 0.30000000000000004 and not to 0.3, as you might have expected.
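You can check this in any JavaScript console. The tolerance-based comparison at the end is not part of the original quiz. It is one common workaround, sketched here with a made-up helper name `nearlyEqual`, and using `Number.EPSILON` as the tolerance is only reasonable for values close to 1.

```javascript
const sum = 0.1 + 0.2;

console.log(sum);         // 0.30000000000000004
console.log(sum === 0.3); // false

// Hypothetical helper: treats two numbers as equal
// when they differ by less than a small tolerance.
function nearlyEqual(a, b, tolerance = Number.EPSILON) {
  return Math.abs(a - b) < tolerance;
}

console.log(nearlyEqual(sum, 0.3)); // true
```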

There’s a whole lecture dedicated to the Binary Number System in my Full Stack JS course CoderslangJS.

ANSWER: `false` will be printed on the screen.